Why use an inertial navigation system (INS) with a LiDAR?

What is LiDAR?

Light detection and ranging (LiDAR) is a technology used widely in the surveying community to collect high-precision three-dimensional (3D) survey data. This generic description of a LiDAR’s mechanism also applies to another commonly used term, laser scanning, so in the following descriptions these terms are mostly used interchangeably. LiDAR systems collect data by emitting many thousands of individual pulses of light per second and measuring the time it takes for each pulse to return to the LiDAR sensor. These pulses are emitted across a swath, known as a scan line. Since the speed of light is known, the distance from the sensor to each pulse’s target (for example, the ground) can be calculated: the range is the round-trip time multiplied by the speed of light, divided by two. Depending upon the type of LiDAR used and how far the ground or structure being measured is from the sensor, these individual data points can be spaced millimetres apart. It is common for each data point to be located to within ±5 mm to ±10 mm relative to the position of the LiDAR sensor.
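
The range calculation above amounts to halving the round-trip distance travelled by the pulse. The Python sketch below illustrates it; the function names are illustrative only, and it ignores the refractive index of air and the waveform processing that a real sensor performs.

```python
# Speed of light in vacuum (m/s). A real system would also correct for the
# refractive index of air, which this sketch ignores.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_time_of_flight(round_trip_time_s: float) -> float:
    """Return the sensor-to-target distance in metres.

    The pulse travels to the target and back, so the one-way range is half
    the round-trip distance.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def time_of_flight_for_range(range_m: float) -> float:
    """Inverse: round-trip time (s) for a target range_m metres away."""
    return 2.0 * range_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    # A target about 100 m away returns the pulse after roughly 667 ns.
    print(f"round trip for 100 m: {time_of_flight_for_range(100.0) * 1e9:.1f} ns")
    # Resolving range to 5 mm implies timing to roughly 33 ps.
    print(f"round trip for 5 mm: {time_of_flight_for_range(0.005) * 1e12:.1f} ps")
```

The second figure shows why LiDAR timing electronics must resolve tens of picoseconds to reach millimetre-level ranging.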

In addition to the accuracy and precision of the data they collect, LiDAR systems make spatial data collection more efficient because their data can be directly georeferenced: geographic coordinates are assigned to each data point collected by the LiDAR without the need for surveyed ground control.

What happens when the LiDAR sensor moves position?

Terrestrial LiDAR survey systems are most commonly mounted on stationary tripods. To improve survey efficiency on large sites, they can also be mounted on moving platforms travelling at speeds ranging from walking pace up to the speed of an airplane. Increasingly, LiDAR sensors are mounted on platforms ranging from backpacks or trolleys that are worn or pushed by survey personnel, to cars, trucks and trains, through to unmanned aerial vehicles (UAVs) and, of course, manned helicopters and planes.

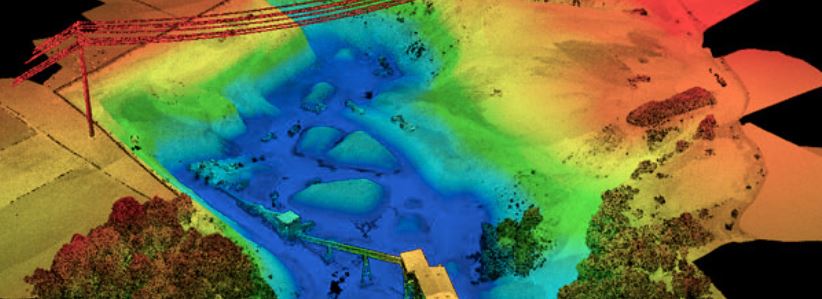

Because the 3D measurements taken by a LiDAR are both dense and accurate relative to the sensor, a LiDAR ‘point cloud’ is often said to be one of the most detailed survey datasets available. To be most useful, real-world geographic coordinates are assigned to each data point in the point cloud, so that the LiDAR data can be used in conjunction with existing mapping or survey information.

Assigning a geographic coordinate to a LiDAR data point requires knowing where the LiDAR sensor is and which direction it is pointing (its exterior orientation) at all times. From these measurements, a real-world geographic coordinate is calculated and dynamically assigned to each pulse return (a process known as direct georeferencing). Given the high precision of LiDAR data relative to the sensor, together with the high frequency and volume of data collected every second, the method of calculating the position and orientation of the platform needs to be equally sophisticated. This can be challenging, depending on the reference information available and the environment around the platform during the survey.
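
Direct georeferencing is commonly written as p_local = p_INS + R(roll, pitch, yaw) · (R_boresight · p_sensor + l), where l is the lever arm from the INS to the LiDAR, expressed in the platform’s body frame. The Python sketch below applies it to a single return. The Z-Y-X rotation convention, the local metric frame and names such as `georeference` and `lever_arm_body` are assumptions for illustration; a real system uses the axis conventions, map projection and calibrated boresight and lever-arm values documented for its own INS and LiDAR.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]
Matrix = Tuple[Vector, Vector, Vector]

IDENTITY: Matrix = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def rotation_from_rpy(roll: float, pitch: float, yaw: float) -> Matrix:
    """Body-to-local rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians.

    This Z-Y-X sequence is one common convention (assumed here); a real INS
    documents its own axis and sign conventions.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return (
        (cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr),
        (sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr),
        (-sp, cp * sr, cp * cr),
    )


def mat_vec(m: Matrix, v: Vector) -> Vector:
    """Multiply a 3x3 matrix by a 3-vector."""
    return (
        m[0][0] * v[0] + m[0][1] * v[1] + m[0][2] * v[2],
        m[1][0] * v[0] + m[1][1] * v[1] + m[1][2] * v[2],
        m[2][0] * v[0] + m[2][1] * v[1] + m[2][2] * v[2],
    )


def georeference(
    point_sensor: Vector,
    ins_position: Vector,
    roll: float,
    pitch: float,
    yaw: float,
    lever_arm_body: Vector = (0.0, 0.0, 0.0),
    boresight: Matrix = IDENTITY,
) -> Vector:
    """Map one LiDAR return from the sensor frame into the local frame.

    p_local = p_ins + R(roll, pitch, yaw) @ (boresight @ p_sensor + lever_arm)
    """
    b = mat_vec(boresight, point_sensor)
    in_body = (b[0] + lever_arm_body[0], b[1] + lever_arm_body[1], b[2] + lever_arm_body[2])
    offset = mat_vec(rotation_from_rpy(roll, pitch, yaw), in_body)
    return (
        ins_position[0] + offset[0],
        ins_position[1] + offset[1],
        ins_position[2] + offset[2],
    )


if __name__ == "__main__":
    # Hypothetical: platform at (1000, 2000, 50) m in a local frame, yawed
    # 90 degrees, with a return measured 10 m along the sensor's x axis.
    print(georeference((10.0, 0.0, 0.0), (1000.0, 2000.0, 50.0), 0.0, 0.0, math.radians(90)))
```

Because each return has its own timestamp, the INS position and attitude are typically interpolated to that time before this transform is applied.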

How can the position and orientation of a LiDAR sensor be measured?

Position

When undertaking a moving LiDAR survey, it could be assumed that using a global navigation satellite system (GNSS) receiver, such as a Global Positioning System (GPS) receiver, to assign geographic coordinates to the collected data would be sufficient. While a GNSS receiver can adequately locate the sensor in static scanning applications, on its own it is not sufficient in a mobile context, where the sensor is in continuous motion while scanning and inaccuracies result.

More specifically, a GPS receiver needs a clear line of sight to at least four GNSS satellites at any one time to obtain longitude, latitude and altitude coordinates; the fourth measurement is needed to resolve the offset of the receiver’s clock. In addition, the distribution of satellites above a particular part of the Earth at any one time may be poor: too few may be visible, or they may be clustered in one part of the sky, which degrades the position fix. The extent to which a position can be acquired also depends on whether structures in the environment block the receiver’s view of the sky, so tall buildings or overhanging trees can cause GPS outages. This problem can be even more acute for ground-based mobile LiDAR, as vehicles often travel through GPS-deprived areas such as urban centers or forests.
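
The need for a fourth satellite arises because each measured pseudorange combines the geometric range with the receiver’s unknown clock offset, giving four unknowns (x, y, z and clock bias). The following is a minimal Gauss-Newton sketch of that textbook least-squares solution, assuming Earth-centred coordinates in metres and ignoring atmospheric delays, Earth rotation and satellite clock errors; `solve_position` is a hypothetical name, not a receiver API.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: satellite geometry is degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def solve_position(
    satellites: Sequence[Point], pseudoranges: Sequence[float], iterations: int = 20
) -> Tuple[float, float, float, float]:
    """Estimate receiver position (x, y, z) and clock bias b, all in metres.

    Each pseudorange is modelled as |satellite - receiver| + b, so there are
    four unknowns and at least four satellites are required.
    """
    if len(satellites) < 4:
        raise ValueError("need at least four satellites: x, y, z and clock bias")
    est = [0.0, 0.0, 0.0, 0.0]  # start at the Earth's centre with zero bias
    for _ in range(iterations):
        jac, res = [], []
        for sat, rho in zip(satellites, pseudoranges):
            diff = [est[i] - sat[i] for i in range(3)]
            dist = math.sqrt(sum(d * d for d in diff))
            jac.append([d / dist for d in diff] + [1.0])  # d(model)/d(x, y, z, b)
            res.append(rho - (dist + est[3]))            # measured minus modelled
        # Gauss-Newton step: solve the normal equations (J^T J) delta = J^T r.
        jtj = [[sum(row[i] * row[j] for row in jac) for j in range(4)] for i in range(4)]
        jtr = [sum(row[i] * r for row, r in zip(jac, res)) for i in range(4)]
        delta = solve_linear(jtj, jtr)
        est = [e + d for e, d in zip(est, delta)]
        if max(abs(d) for d in delta) < 1e-6:
            break
    return est[0], est[1], est[2], est[3]


if __name__ == "__main__":
    # Hypothetical satellite positions (m, Earth-centred) and a receiver on the surface.
    sats = [
        (26_560_000.0, 0.0, 0.0),
        (20_000_000.0, 15_000_000.0, 8_000_000.0),
        (20_000_000.0, -12_000_000.0, 12_000_000.0),
        (18_000_000.0, 5_000_000.0, -18_000_000.0),
    ]
    truth, bias = (6_371_000.0, 0.0, 0.0), 3_000.0
    rhos = [math.dist(s, truth) + bias for s in sats]
    print(solve_position(sats, rhos))
```

With only three satellites the system is underdetermined, and the function refuses to solve it.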

Effects of the immediate environment aside, even if the required number of satellites can be seen, a GPS receiver records position far less frequently than the LiDAR sensor collects data. As a result, the location of the survey platform must also be known at times when no GPS reading is available: the LiDAR positioning system needs to be able to measure, or predict, where the vehicle has traveled between the GPS readings it receives. Errors in the location calculation, whether caused by the environment or by the rate at which positions are recorded relative to the speed of the platform, can accumulate over a project into a growing error budget that is commonly known as ‘drift’.
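
The mismatch in rates, and the way small errors accumulate, can be made concrete with simple arithmetic. The figures in this sketch (a 10 Hz GNSS receiver, 500,000 LiDAR points per second, a platform at 20 m/s and a 0.01 m/s² uncorrected acceleration bias) are hypothetical examples, not specifications of any product.

```python
def points_between_fixes(lidar_points_per_s: float, fix_rate_hz: float) -> float:
    """LiDAR returns recorded between two consecutive position fixes."""
    return lidar_points_per_s / fix_rate_hz


def distance_between_fixes(speed_m_s: float, fix_rate_hz: float) -> float:
    """Distance (m) the platform travels between two consecutive position fixes."""
    return speed_m_s / fix_rate_hz


def drift_from_accel_bias(bias_m_s2: float, outage_s: float) -> float:
    """Position error (m) after integrating a constant acceleration bias twice.

    A constant bias b integrates to a velocity error b*t and then to a
    position error b*t**2 / 2, so drift grows quadratically with time.
    """
    return 0.5 * bias_m_s2 * outage_s ** 2


if __name__ == "__main__":
    # Hypothetical figures chosen only to illustrate the scale of the problem.
    print(points_between_fixes(500_000, 10))   # returns per GNSS fix
    print(distance_between_fixes(20.0, 10))    # metres travelled per fix
    for outage in (1.0, 10.0, 60.0):
        print(outage, drift_from_accel_bias(0.01, outage))
```

With these numbers, 50,000 returns are recorded and the platform moves 2 m between fixes, while a 10 s outage already produces about 0.5 m of drift, growing to about 18 m after 60 s.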

Orientation

Regardless of the platform on which the LiDAR is mounted (e.g. airborne or ground-based), in addition to an accurate sensor location it is essential to know the system’s orientation in order to position the collected data accurately. This exterior orientation needs to be calculated continuously while the platform is in motion. As well as its straight-line movement, the platform’s motion needs to be described in terms of roll, pitch and yaw. These motions may be familiar from visualizing a plane in flight, but anyone who has driven down a road, or driven too fast round a corner, will also be familiar with them. Because of the fine detail that a LiDAR captures, any slight departure of the platform from level will affect the spatial coordinates assigned to a LiDAR data point.
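
To see why small attitude errors matter, note that an angular error θ displaces a return at range r sideways by about r·tan θ. The sketch below computes this, together with the largest angular error that keeps the displacement within a given tolerance; the function names are illustrative.

```python
import math


def displacement_from_angle_error(range_m: float, angle_error_deg: float) -> float:
    """Lateral displacement (m) of a return at range_m caused by an attitude error."""
    return range_m * math.tan(math.radians(angle_error_deg))


def max_angle_error_deg(range_m: float, tolerance_m: float) -> float:
    """Largest attitude error (degrees) that keeps displacement within tolerance_m."""
    return math.degrees(math.atan(tolerance_m / range_m))


if __name__ == "__main__":
    for r in (10.0, 50.0, 100.0):
        cm = displacement_from_angle_error(r, 0.1) * 100
        print(f"0.1 deg at {r:5.0f} m -> {cm:.1f} cm")
    print(f"5 mm at 100 m needs < {max_angle_error_deg(100.0, 0.005):.4f} deg")
```

A 0.1° error in roll, pitch or yaw moves a point 100 m away by roughly 17 cm, far more than the ±5 mm to ±10 mm ranging tolerance of the sensor; keeping that point within 5 mm requires attitude known to about 0.003°.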

It is also important to know whether the platform has accelerated or decelerated, and whether its path has deviated from a straight line. Again, because of the frequency and resolution of a LiDAR sensor, these changes in motion need to be accounted for in order to know where the point cloud was collected.
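
One way to see why acceleration must be measured: if positions are simply interpolated linearly between two fixes a time T apart while the platform accelerates at a constant rate a, the interpolated position is wrong by up to a·T²/8, at the midpoint. The sketch below checks this numerically; the one-dimensional, constant-acceleration motion model is a simplifying assumption.

```python
def true_position(v0: float, accel: float, t: float) -> float:
    """1D position under constant acceleration, starting at 0."""
    return v0 * t + 0.5 * accel * t * t


def interpolated_position(p0: float, p1: float, fix_interval: float, t: float) -> float:
    """Straight-line interpolation between fixes at t=0 and t=fix_interval."""
    return p0 + (p1 - p0) * t / fix_interval


def max_interpolation_error(v0: float, accel: float, fix_interval: float, samples: int = 1000) -> float:
    """Largest gap between true and interpolated positions, found by sampling."""
    p0 = true_position(v0, accel, 0.0)
    p1 = true_position(v0, accel, fix_interval)
    worst = 0.0
    for i in range(samples + 1):
        t = fix_interval * i / samples
        err = abs(true_position(v0, accel, t) - interpolated_position(p0, p1, fix_interval, t))
        worst = max(worst, err)
    return worst


def predicted_max_error(accel: float, fix_interval: float) -> float:
    """Closed form |a| * T**2 / 8, reached halfway between fixes."""
    return abs(accel) * fix_interval ** 2 / 8.0


if __name__ == "__main__":
    # Hypothetical platform at 20 m/s accelerating at 3 m/s^2.
    for interval in (1.0, 0.1):
        print(interval, max_interpolation_error(20.0, 3.0, interval), predicted_max_error(3.0, interval))
```

With a = 3 m/s², fixes 1 s apart leave an error of up to 0.375 m, and fixes 0.1 s apart still leave almost 4 mm; measuring acceleration directly removes the need to assume uniform motion between fixes.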

The hardware component that provides much of the information about the dynamics of the platform’s motion is the inertial measurement unit (IMU). Made up of an assembly of gyroscopes and accelerometers, the IMU provides a continuous stream of data describing the linear acceleration of the vehicle along three axes, together with its angular rate about three axes, from which roll, pitch and yaw are derived.
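
Turning raw IMU output into motion means integrating it: angular rate gives heading, and acceleration, once rotated into a fixed frame, gives velocity and then position. The sketch below is a deliberately simplified planar version (yaw only, with no gravity, Earth-rotation or sensor-bias handling); the `ImuSample` and `PlanarState` types are hypothetical, not an OxTS data format.

```python
import math
from dataclasses import dataclass


@dataclass
class ImuSample:
    dt: float          # time since the previous sample (s)
    accel_fwd: float   # body-frame forward acceleration (m/s^2)
    accel_left: float  # body-frame leftward acceleration (m/s^2)
    yaw_rate: float    # angular rate about the vertical axis (rad/s)


@dataclass
class PlanarState:
    x: float = 0.0        # position in a fixed local frame (m)
    y: float = 0.0
    vx: float = 0.0       # velocity in the local frame (m/s)
    vy: float = 0.0
    heading: float = 0.0  # radians, counter-clockwise from the +x axis


def propagate(state: PlanarState, sample: ImuSample) -> PlanarState:
    """Advance the state by one IMU sample (simplified planar strapdown step)."""
    # 1. Integrate angular rate into heading.
    state.heading += sample.yaw_rate * sample.dt
    # 2. Rotate body-frame acceleration into the local frame.
    c, s = math.cos(state.heading), math.sin(state.heading)
    ax = c * sample.accel_fwd - s * sample.accel_left
    ay = s * sample.accel_fwd + c * sample.accel_left
    # 3. Integrate acceleration into velocity, then velocity into position
    #    (trapezoidal rule for the position step).
    vx_new = state.vx + ax * sample.dt
    vy_new = state.vy + ay * sample.dt
    state.x += 0.5 * (state.vx + vx_new) * sample.dt
    state.y += 0.5 * (state.vy + vy_new) * sample.dt
    state.vx, state.vy = vx_new, vy_new
    return state


if __name__ == "__main__":
    # Hypothetical: 1 s of 1 m/s^2 forward acceleration sampled at 100 Hz.
    state = PlanarState()
    for _ in range(100):
        propagate(state, ImuSample(dt=0.01, accel_fwd=1.0, accel_left=0.0, yaw_rate=0.0))
    print(state)
```

Because every measured rate and acceleration is integrated once or twice, any sensor error grows over time unless it is corrected by other information.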

Direct Georeferencing using an Inertial Navigation System

The inertial navigation system (INS) is the computational system that houses an IMU together with a processing unit that applies statistical (Kalman) filters to calculate a best estimate of the moving platform’s position throughout its journey. If GPS data are available, the INS includes them in its estimate of position; if the LiDAR system is mounted on a road vehicle with an odometer that helps measure the distance traveled, odometer data are included in the INS calculations too.
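
The Kalman filtering idea can be illustrated with a toy one-dimensional filter: IMU acceleration drives the prediction step, and a GPS position, when one is available, corrects it. This is a sketch under simplifying assumptions (a single axis, white-noise acceleration error, no bias states), not how any OxTS product implements its filter; all names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Kalman1D:
    accel_noise: float  # std dev of IMU acceleration error (m/s^2), assumed
    gnss_noise: float   # std dev of GPS position error (m), assumed
    p: float = 0.0      # position estimate (m)
    v: float = 0.0      # velocity estimate (m/s)
    # State covariance [[var_p, cov_pv], [cov_vp, var_v]], with a vague prior.
    P: List[List[float]] = field(default_factory=lambda: [[100.0, 0.0], [0.0, 10.0]])

    def predict(self, accel: float, dt: float) -> None:
        """Propagate the state using the measured acceleration (held constant over dt)."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (p00, p01), (p10, p11) = self.P
        q = self.accel_noise ** 2
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]] and Q for white acceleration noise.
        self.P = [
            [p00 + dt * (p10 + p01) + dt * dt * p11 + q * dt ** 4 / 4, p01 + dt * p11 + q * dt ** 3 / 2],
            [p10 + dt * p11 + q * dt ** 3 / 2, p11 + q * dt * dt],
        ]

    def update(self, gnss_position: float) -> None:
        """Correct the state with a GPS position measurement (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.gnss_noise ** 2  # innovation variance
        k0, k1 = p00 / s, p10 / s       # Kalman gain
        innovation = gnss_position - self.p
        self.p += k0 * innovation
        self.v += k1 * innovation
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]

    def step(self, accel: float, dt: float, gnss: Optional[float] = None) -> float:
        """One filter cycle; pass gnss=None during an outage to predict only."""
        self.predict(accel, dt)
        if gnss is not None:
            self.update(gnss)
        return self.p
```

During an outage only `predict` runs, so the position variance grows until the next GPS fix pulls it back down; a real INS filter applies the same idea to a much larger state that includes attitude and sensor biases.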

By considering all sources of position and orientation information simultaneously, the INS can compensate for deficiencies in any one of them. For example, because GPS data can suffer frequent outages, during which the position estimate is liable to ‘drift’, the INS can apply dead reckoning to predict the platform’s path, giving extra weight to information from an odometer (where available) or from the IMU’s accelerometers as appropriate.
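
Dead reckoning from an odometer and a gyro can be sketched as follows: the odometer supplies distance travelled, the gyro supplies heading change, and each step advances the position along the heading at the middle of the step. This is a planar illustration under stated assumptions (no wheel slip, no odometer scale-factor error); `dead_reckon` is a hypothetical helper, and in a real INS it is the Kalman filter, not this loop, that decides how much weight each source receives.

```python
import math
from typing import Iterable, Tuple

# One dead-reckoning step: (odometer distance in m, gyro yaw rate in rad/s, dt in s).
Step = Tuple[float, float, float]


def dead_reckon(
    start_xy: Tuple[float, float], start_heading: float, steps: Iterable[Step]
) -> Tuple[float, float, float]:
    """Propagate a planar position from odometer distance and gyro yaw rate.

    Moving along the mid-step heading closely follows constant-radius turns
    when the steps are short.
    """
    x, y = start_xy
    heading = start_heading
    for distance, yaw_rate, dt in steps:
        dtheta = yaw_rate * dt
        mid_heading = heading + 0.5 * dtheta
        x += distance * math.cos(mid_heading)
        y += distance * math.sin(mid_heading)
        heading += dtheta
    return x, y, heading


if __name__ == "__main__":
    # Hypothetical: 100 m straight ahead at 10 m/s with a perfect gyro...
    print(dead_reckon((0.0, 0.0), 0.0, [(1.0, 0.0, 0.1)] * 100))
    # ...and the same drive with an uncorrected gyro bias of 0.001 rad/s.
    print(dead_reckon((0.0, 0.0), 0.0, [(1.0, 0.001, 0.1)] * 100))
```

The second run shows why outages matter: a gyro bias of only 0.001 rad/s produces about 0.5 m of cross-track error over 100 m driven at 10 m/s.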

With update rates of up to 250 Hz, INS systems such as the OxTS xNAV550 and OxTS Inertial+ run combined forward-and-backward (in time) processing routines to calculate the most likely overall position of the moving platform and the LiDAR sensor mounted on it. It is this dynamic process of continuously calculating the best estimate of the position and orientation of the LiDAR sensor, and consequently of each individual data point it collects, that is known as direct georeferencing.
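
Forward-and-backward processing is commonly implemented as a smoother: one filter pass runs forward in time, another runs backward, and the two estimates are fused. The sketch below shows only that final fusion step for a scalar state, using inverse-variance weighting, plus an illustrative variance profile through a GNSS outage. It is an illustration of the general technique, not OxTS’s algorithm; the linear growth of variance with time since the last fix is a hypothetical assumption, and the names are illustrative.

```python
from typing import List, Tuple


def combine(x_fwd: float, var_fwd: float, x_bwd: float, var_bwd: float) -> Tuple[float, float]:
    """Fuse forward and backward estimates of the same quantity.

    Inverse-variance weighting: the estimate with the smaller variance gets
    more weight, and the fused variance is smaller than either input.
    """
    w_fwd, w_bwd = 1.0 / var_fwd, 1.0 / var_bwd
    var = 1.0 / (w_fwd + w_bwd)
    return var * (w_fwd * x_fwd + w_bwd * x_bwd), var


def outage_profile(
    outage_s: float, growth: float, var_at_fix: float, n: int = 10
) -> List[Tuple[float, float, float, float]]:
    """Variance through a GNSS outage for forward, backward and combined passes.

    Assumes (hypothetically) that position variance grows linearly with time
    since the last fix: forward from the start of the outage, backward from its end.
    """
    rows = []
    for i in range(n + 1):
        t = outage_s * i / n
        var_f = var_at_fix + growth * t
        var_b = var_at_fix + growth * (outage_s - t)
        _, var_s = combine(0.0, var_f, 0.0, var_b)
        rows.append((t, var_f, var_b, var_s))
    return rows


if __name__ == "__main__":
    for t, vf, vb, vs in outage_profile(outage_s=20.0, growth=0.05, var_at_fix=0.01):
        print(f"t={t:5.1f} s  forward={vf:.3f}  backward={vb:.3f}  combined={vs:.3f} m^2")
```

Halfway through the outage, where each single pass is weakest, the combined variance is half that of either pass, which is why processing in both directions helps most in the middle of GNSS gaps. A Rauch-Tung-Striebel smoother is a common alternative formulation of the same idea.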

LiDAR implementations using Inertial Navigation Systems

OxTS inertial technology has been used in a range of high-precision LiDAR implementations on vehicle-based platforms and on both manned and unmanned aerial systems.

Swiss UAV firm Aeroscout has successfully integrated the xNAV550 with the Riegl VUX-1 LiDAR system for powerline mapping. Thanks to a deep yet simple integration between the INS and the LiDAR sensor, the data collection and processing workflow takes just a few clicks. In 14 minutes of flight time, Aeroscout can collect LiDAR data covering 1 km of high-voltage powerline with an overall spatial accuracy of 1.6 cm.

In Australia, HAWCS operates an OxTS Inertial+ system to calculate the exterior orientation of, and directly georeference, a helicopter-mounted LiDAR system. Covering hundreds of kilometres every day, the HAWCS team can measure vegetation clearances along powerlines to within 20 cm.

Meanwhile, for highway surveys, Swedish firm WSP has deployed the OxTS Inertial+ INS on multiple GeoTracker systems since 2010. WSP relies on the Inertial+ to reduce GPS drift and to use odometer calculations optimised by OxTS, increasing positional accuracy where obstructions such as bridges, tunnels or dense urban canyons occur. Because it provides a single synchronisation mechanism for directly georeferencing data from LiDAR sensors alongside imagery from 360° cameras and HD video, the OxTS Inertial+ INS is invaluable for this type of vehicle-based mobile mapping application.